Hello,

While a lot of the 2.0 development time has been spent on new features and quality of life, we still take care of the smaller details and technical improvements.

Deterministic multithreading is hard

Recently a desync bug was reported to us involving the modding API and multiple Windows and Linux computers that the player was using. My first instinct was to blame the mod developer for doing something wrong, but I've seen enough bug reports over the years to know that dismissing one without first investigating is a bad idea and a great way to eat crow.

I could not reproduce the desync, but the player was able to with ease. At first I thought it was a Windows vs Linux issue (we've had many of those), but then the player was able to reproduce it with Linux on both computers. Next I thought it was a hardware problem; we've seen our share of faulty computers that just don't do what they're supposed to do. Convincing someone their hardware is broken is difficult and time-consuming in the best scenarios.

After several failed attempts to reproduce the issue on my one computer, Boskid was able to reproduce it using his multiple computers. Being able to reproduce a bug locally is, I'd guess, around 90-95% of the time spent fixing reported bugs. Once Boskid could reproduce the issue on demand, he narrowed it down to the computers having a different number of CPU cores. With that newfound knowledge he made a test that could run on my single computer and reproduce the desync by artificially pretending I had fewer CPU cores than I did.

The problem ended up needing 4 different moving pieces to all come together to expose a threading determinism issue that has been in the game since I put it there on July 22, 2017.

- A mod needed to listen to the chunk generated event and change the tiles on a chunk when it happened.

- A mod needed to request several chunks be generated.

- A mod needed to force all requested chunks to be generated right now.

- The game needed to be run on two computers with a different number of CPU cores.

The logic to generate chunks 'right now' would attempt to use all of your available CPU cores but was done in a way that the number of cores - when combined with the other moving pieces - would change the chunk generation results slightly.

The fix wasn't that complex to implement and will be in 2.0, but it just goes to show that multithreading is hard, deterministic multithreading even more so.
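To make the failure mode concrete, here is a deliberately simplified Python sketch. It is not the game's code and the real bug may have looked quite different; `generate`, `on_chunk_generated`, and `generate_all` are hypothetical names, and the "batch of chunks per core" scheme is an assumption chosen only to show how core count can leak into results once a mod edits tiles from the chunk generated event.

```python
from concurrent.futures import ThreadPoolExecutor


def generate(chunk_id, snapshot):
    # Hypothetical generator: a chunk's value depends on whatever its
    # left neighbour looks like at the moment the batch starts.
    return chunk_id * 10 + snapshot.get(chunk_id - 1, 0)


def on_chunk_generated(chunk_id, world):
    # Hypothetical mod handler that edits the tiles of the new chunk.
    world[chunk_id] += 1


def generate_all(requested, batch_size, workers):
    """Generate chunks in batches; events fire after each batch finishes."""
    world = {}
    for start in range(0, len(requested), batch_size):
        batch = requested[start:start + batch_size]
        snapshot = dict(world)  # workers only read the pre-batch state
        with ThreadPoolExecutor(max_workers=workers) as pool:
            # pool.map returns results in request order, not finish order.
            results = list(pool.map(lambda c: generate(c, snapshot), batch))
        for chunk_id, value in zip(batch, results):
            world[chunk_id] = value
            on_chunk_generated(chunk_id, world)
    return world


requested = [1, 2, 3, 4]

# Assumed bug pattern: batch size follows the core count, so a 2-core and
# a 4-core machine see the mod's edits at different points in time.
two_cores = generate_all(requested, batch_size=2, workers=2)
four_cores = generate_all(requested, batch_size=4, workers=4)
print(two_cores == four_cores)  # False: the two machines would desync

# Assumed fix pattern: batch size is a constant, threads only add speed.
print(generate_all(requested, 2, 1) == generate_all(requested, 2, 8))  # True
```

The point of the sketch is the design rule rather than the details: the number of threads may change how fast the work finishes, but it should never change how the work is split up or the order in which results and events are applied.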

Multiplayer auto-pause

Dedicated servers have a feature where the server automatically pauses when the last player disconnects. This works great and means you can leave a dedicated server up without worrying that the biters will eat your base while you're gone. Except, there are some edge cases that we never got around to handling (until now).

Case 1: When you join the auto-paused server it instantly resumes running, even if you haven't fully loaded into the game. It turns out this was incredibly simple to address; for some reason it just fell through the cracks over the years. For 2.0, auto-paused servers will stay paused until at least 1 player has fully loaded into the game.

Case 2: Joining players need to catch up to the running game. This works great for "normal" games and allows the existing players to continue without having to wait for the joining players. But players run into issues with much larger maps or slow internet connections. Someone joining might take 1, 2, or several minutes to download, load, and catch up to the server fully if it continues on without them. For 2.0 we've added an option to "auto-pause when a player is joining" which works exactly as it says.
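The two cases boil down to a small decision rule. The Python sketch below is only an illustration of that rule: `PlayerState`, `ServerSettings`, and `should_pause` are made-up names, not the game's actual settings or API.

```python
from dataclasses import dataclass
from enum import Enum, auto


class PlayerState(Enum):
    JOINING = auto()   # downloading, loading, or catching up
    IN_GAME = auto()   # fully loaded into the game


@dataclass
class ServerSettings:
    # Hypothetical setting names, for illustration only.
    auto_pause: bool = True
    auto_pause_when_joining: bool = False


def should_pause(players, settings):
    """players maps a player name to a PlayerState."""
    states = list(players.values())
    nobody_in_game = not any(s is PlayerState.IN_GAME for s in states)
    someone_joining = any(s is PlayerState.JOINING for s in states)
    # Case 1: stay paused until at least one player has fully loaded.
    if settings.auto_pause and nobody_in_game:
        return True
    # Case 2: optionally pause everyone while a player is still joining.
    if settings.auto_pause_when_joining and someone_joining:
        return True
    return False


settings = ServerSettings(auto_pause=True, auto_pause_when_joining=True)
print(should_pause({}, settings))                              # True
print(should_pause({"a": PlayerState.JOINING}, settings))      # True
print(should_pause({"a": PlayerState.IN_GAME}, settings))      # False
```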

Faster construction robot tasks

It feels like every other week someone is asking "why aren't my construction robots working?" and in the screenshot they provide there's a little alert showing "600 jobs missing materials/robots". The issue has been there since the beginning of robots and still today we don't have a nice solution for it.

The problem is, given a task construction robots need to do, the game needs to find the best robot from the logistic network that is in range to do that thing. The check for "is this logistic network in range of the entity" is O(Roboport-Count). We do not control how slow that's going to be because the player can build 1 Roboport, or 100,000, or as many as their computer can take. So we need to make sure that the operation doesn't grind the game to a halt while it runs. We do this by only processing a few construction robot tasks each tick.
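A minimal Python sketch of why that check scales with the roboport count. The `Roboport` class and `network_covers` function are hypothetical, and treating each construction area as an axis-aligned square around the roboport is an assumption made for the example.

```python
from dataclasses import dataclass


@dataclass
class Roboport:
    x: float
    y: float
    construction_radius: float  # half the side length of the square area


def network_covers(roboports, x, y):
    """Linear scan: worst case touches every roboport in the network."""
    for port in roboports:
        r = port.construction_radius
        if abs(x - port.x) <= r and abs(y - port.y) <= r:
            return True
    return False


# A row of roboports; a task far from all of them forces a full scan.
network = [Roboport(x=i * 50.0, y=0.0, construction_radius=55.0)
           for i in range(1000)]
print(network_covers(network, 120.0, 10.0))    # True, found early
print(network_covers(network, 120.0, 500.0))   # False, after 1000 checks
```

The expensive case is the miss: a task that no roboport can reach only returns after every roboport has been examined, which is exactly the situation when jobs are missing robots or coverage.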

In 1.1 the game will check construction jobs 3 times per game update. If it fails to find a robot it will stop for the current update, so at most one failed attempt (and one alert) is produced per update. Alerts for failed attempts last for 10 seconds. So at 60 updates per second, and 10 seconds per alert, that means 600 alerts will be created before the first one times out. This is where the magic "600 jobs missing materials/robots" alert count comes from.
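The 600 figure can be checked with a tiny simulation. This Python sketch assumes one failed attempt, and therefore one new alert, per update; `peak_alert_count` is a name invented for the example.

```python
from collections import deque

UPDATES_PER_SECOND = 60
ALERT_LIFETIME_SECONDS = 10


def peak_alert_count(total_updates, failures_per_update=1):
    """Return the largest number of alerts alive at the same time."""
    lifetime = UPDATES_PER_SECOND * ALERT_LIFETIME_SECONDS
    expiries = deque()  # update number at which each alert disappears
    peak = 0
    for tick in range(total_updates):
        # Drop alerts whose lifetime has run out.
        while expiries and expiries[0] <= tick:
            expiries.popleft()
        # Assumed: each failed attempt raises exactly one alert.
        for _ in range(failures_per_update):
            expiries.append(tick + lifetime)
        peak = max(peak, len(expiries))
    return peak


print(peak_alert_count(60 * 60))  # one minute of failed updates -> 600
```

However long the simulation runs, the count never climbs past 600: every update adds one alert and, after the first 10 seconds, also expires one.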

It has worked this way for as long as I've worked on Factorio and will likely keep working like that at some basic level. We can't just crank up the count of tasks checked with each update because we know that operation is potentially slow, and slower the more roboports a player builds.

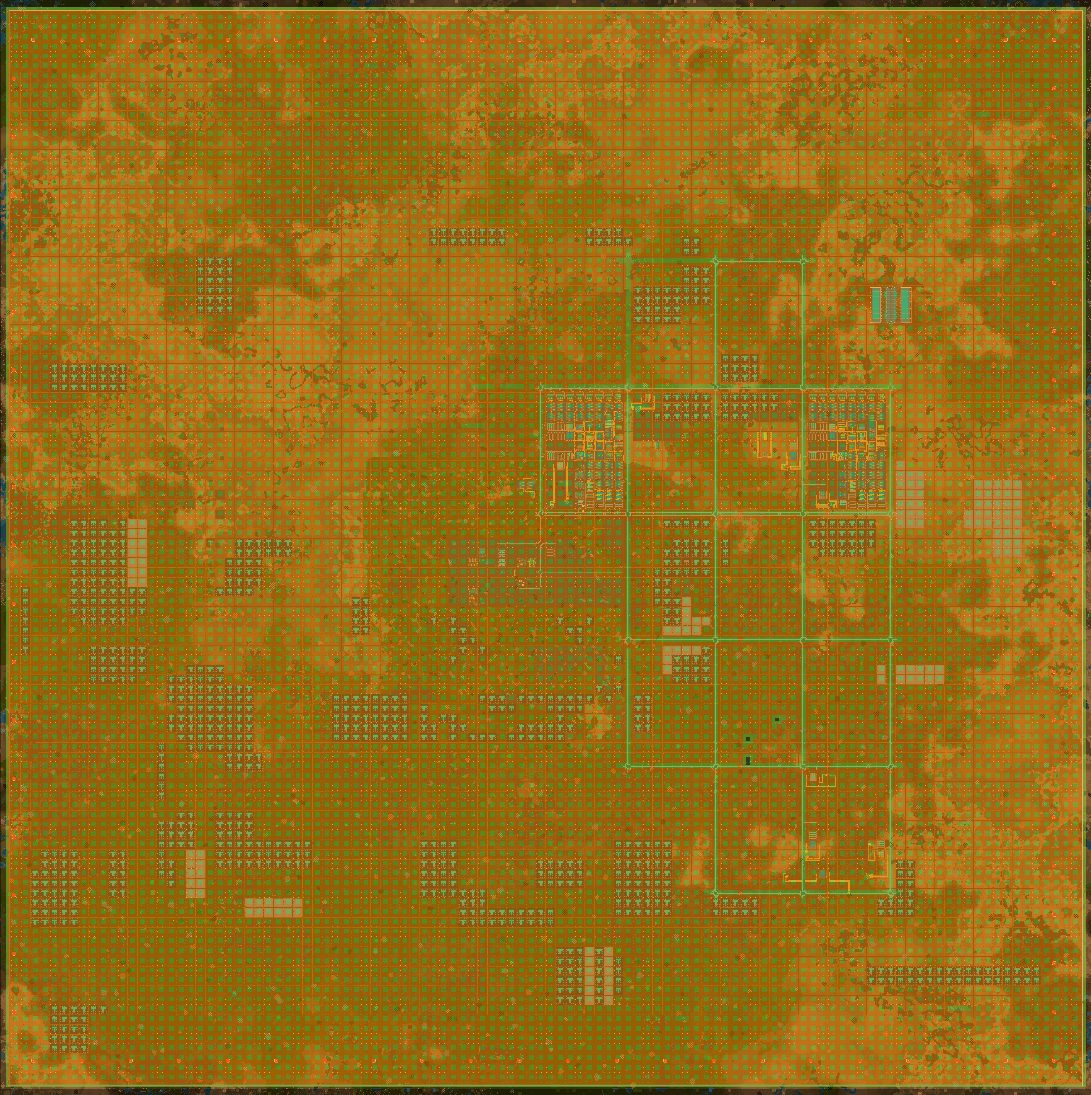

Earlier this year someone (forum post) ran across this issue again, but they decided to "solve" it themselves by doing what we said we can't do: "crank up the checked tasks per update", except they went from 1 per update to 374. Also, they built 36,815 roboports. It was, as predicted, slow.

But that got me wondering, what if I could make the slow part a lot faster? Like change-the-algorithm faster? Inspiration hit me when I remembered that we have a set of logic in the game already to take the roboport logistic and construction areas, and compute the resulting rectangle union. In simple terms what it does is produce a nice set of deduplicated rectangles that cover the total area for all of the roboports. We use this for rendering to avoid a lot of over-draw when roboports are near each other.

That, combined with sorting the resulting rectangles, meant I could do a simple binary search to check if a given task was within the network area. In the end the check went from O(N) on 36,815 roboports, to O(N) on ~900 rectangle union areas, to O(log N) on ~900 rectangle union areas.
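Here is one way such a lookup could work, sketched in Python. It is an assumption about the general technique, not the game's implementation: overlapping areas are cut into vertical slabs of merged, sorted y-intervals, and a query then does one binary search on x and one on y. The names `build_slabs` and `contains` are hypothetical, and rectangles are treated as half-open `(x1, y1, x2, y2)` tuples.

```python
from bisect import bisect_right


def build_slabs(rects):
    """Cut the union of rectangles into sorted, non-overlapping pieces."""
    rects = list(rects)
    xs = sorted({x for x1, _, x2, _ in rects for x in (x1, x2)})
    slabs = []
    for left, right in zip(xs, xs[1:]):
        # Every rectangle either fully spans this slab or misses it.
        spans = sorted((y1, y2) for x1, y1, x2, y2 in rects
                       if x1 <= left and right <= x2)
        merged = []
        for y1, y2 in spans:
            if merged and y1 <= merged[-1][1]:
                merged[-1][1] = max(merged[-1][1], y2)  # overlap: extend
            else:
                merged.append([y1, y2])
        slabs.append(([m[0] for m in merged], [m[1] for m in merged]))
    return xs, slabs


def contains(index, x, y):
    """Two binary searches: find the slab by x, then the interval by y."""
    xs, slabs = index
    i = bisect_right(xs, x) - 1
    if i < 0 or i >= len(slabs):
        return False
    starts, ends = slabs[i]
    j = bisect_right(starts, y) - 1
    return j >= 0 and y < ends[j]


# Two overlapping areas plus one separate area.
areas = [(0, 0, 10, 10), (5, 5, 15, 15), (20, 0, 30, 10)]
index = build_slabs(areas)
print(contains(index, 12, 12))  # True, inside the second area
print(contains(index, 12, 2))   # False, in the notch below it
print(contains(index, 17, 5))   # False, in the gap between areas
```

Building the index in this sketch is deliberately naive and slow; that is acceptable only under the assumption that it is rebuilt when roboports change, while the cheap `contains` query is what runs for every task.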

The time to check "is it in this network" went from expensive to essentially free. With that speedup I was able to crank up the rate at which tasks get checked for 2.0 and hopefully put this issue to rest.

As always, report your thoughts at the usual places.